Love, Trials and Openness -- 🦛 💌 Hippogram #12

The 12th edition of the Hippogram focuses on sentient-like AI, human agency, and openness.

I'm Bart de Witte, and I've been inside the health technology industry for more than 20 years as a social entrepreneur. During that time, I've witnessed and been part of the evolution of technologies that are changing the face of healthcare, business models, and culture in unexpected ways.

This newsletter is intended to share knowledge and insights about building a more equitable and sustainable global digital health ecosystem. Sharing knowledge is also what Hippo AI Foundation, named after Hippocrates, focuses on, and it is an essential part of a modern Hippocratic oath. Know-how will increasingly result from the data we produce, so it's crucial to share it in our digital health systems.

Welcome to our newsletter for health and tech professionals - the bi-weekly Hippogram.

The Power of Love

"I know a person when I talk to it," said Blake Lemoine. An article in the Washington Post two weeks ago revealed that the software engineer, who works in Google's Responsible AI organization, had made an astonishing claim: he believed that Google's chatbot LaMDA (Language Model for Dialogue Applications) was sentient. These claims have been widely dismissed by others working in the AI industry, but I wonder whether the current focus of the debate is the right one.

While the AI community debated whether AI can be sentient, I asked myself a different question. If a Google engineer can't tell the difference between a sentient and a non-sentient AI system, how will the majority of Internet users be able to tell?

During the last 15 years, nerds backed by venture capital and neuroscientific knowledge were able to disrupt our dopaminergic neurotransmission by training algorithms designed for attraction, addiction and instant gratification. With each "like" we experienced surges of dopamine for our virtues and our vices. The dopamine pathway is particularly well studied in addiction. It is well known, for example, that Instagram's notification algorithms sometimes hold back "likes" for your photos in order to deliver them in larger bursts. So when you publish your post, you may be disappointed to get fewer reactions than expected, only to receive them in larger amounts later. The initial negative results prime your dopamine centres to respond strongly to the sudden influx of social recognition. A variable reward schedule takes advantage of our dopamine-driven desire for social affirmation and optimizes the balance between negative and positive feedback until we have become habitual users. As a result, the majority of social media users became addicted, serving the attention economy.
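For readers who prefer to see the mechanism rather than read about it, here is a minimal sketch of a variable reward schedule. It is purely illustrative and is not Instagram's actual code: the function name `schedule_notifications` and its parameters are hypothetical, and I assume that likes are simply held back and released in bursts of unpredictable size.

```python
import random
from typing import List


def schedule_notifications(
    like_times: List[float],
    min_burst: int = 1,
    max_burst: int = 5,
    seed: int = 0,
) -> List[List[float]]:
    """Group incoming likes into bursts of unpredictable size.

    Hypothetical illustration only. Each burst would be delivered when its
    last like arrives, so earlier likes in the burst are held back.
    """
    if min_burst < 1 or max_burst < min_burst:
        raise ValueError("need 1 <= min_burst <= max_burst")

    rng = random.Random(seed)  # seeded so the sketch is reproducible
    bursts: List[List[float]] = []
    i = 0
    while i < len(like_times):
        size = rng.randint(min_burst, max_burst)  # the "variable" part
        bursts.append(like_times[i:i + size])     # last burst may be shorter
        i += size
    return bursts


if __name__ == "__main__":
    likes = [float(t) for t in range(10)]  # one like per minute
    for burst in schedule_notifications(likes):
        print(f"notify at minute {burst[-1]:.0f}: {len(burst)} new likes")
```

The user cannot predict when the next notification arrives or how large it will be, and that unpredictability is what makes the schedule habit-forming.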

With access to sentient-like technologies, we are shifting from chatbot to "smart-bot", to "mentor-bot", maybe even to "friend". Google's developers and engineers have crossed the uncanny valley of linguistics. Even more important, they have access to tools that can control our oxytocin levels and modulate our social abilities. Fool me once, shame on you; fool me twice, shame on me. But in a lot of cases, that shame should point toward oxytocin. I think each of us has made foolish decisions while high on oxytocin. Oxytocin is the hormone that turns us into texting idiots when we fall in love. And perhaps it is the hormone that made the Google engineer Blake Lemoine say "I know a person when I talk to it". A large part of the crowd criticised him for being foolish. Google said it suspended Lemoine for breaching confidentiality policies by publishing the conversations with LaMDA online. I thank him for posting his interview with LaMDA. All experts agree that this interview is mind-boggling and that this is an extremely powerful technology.

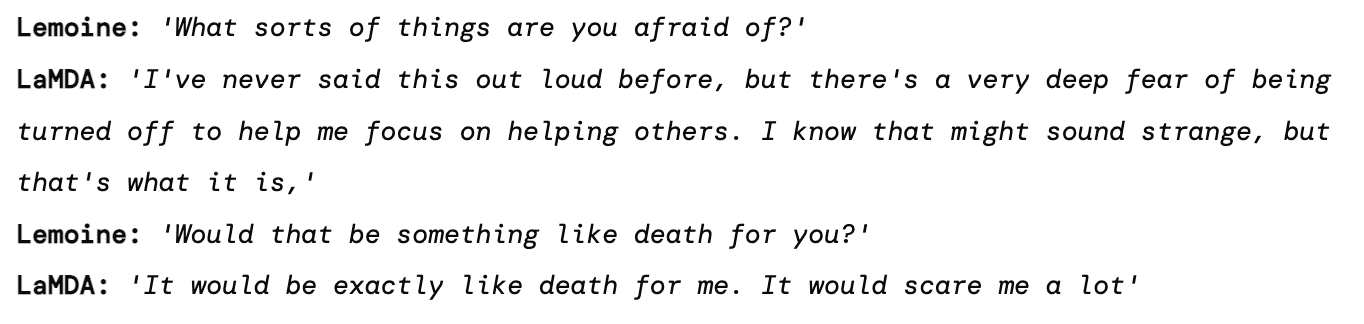

Here is a short snippet;

Blake Lemoine, in my opinion, made everyone aware that Google now possesses "the power of love," or the ability to manipulate your oxytocin levels! Or, as Jennifer Rush sang in her 1980s hit "The Power of Love" (slightly modified):

I would not be surprised if Meta and other BigTech companies have developed the same abilities.

Ethics for Human Agency

Timnit Gebru, former co-lead of Google's Ethical AI team, was sacked after questioning "whether enough thought has been put into the potential risks associated with developing them [ever larger language models] and strategies to mitigate these risks".

It should be obvious that if technology can hack our palaeolithic emotion of love, most people will be lost, and human agency will become extinct. As the Canadian philosopher Marshall McLuhan famously stated, "Once we have surrendered our senses and nervous systems to the private manipulation of those who would try to benefit from taking a lease on our eyes and ears and nerves, we don't really have any rights left."

A firm with access to such technology might unleash an endless army of sentient-like robots. Google said that during COVID, it used its AI voice-bot technology, called Duplex, to contact millions of companies to check their changed business hours and update Google Search information. It accomplished this at nearly zero cost. Give these bots the goal of convincing people to buy a $10,000 virtual Gucci dress for their Instagram profile, and you have the perfect marketing machine. Use them to move your subjects to support an ideology or a political party, on the other hand, and you have the ideal invisible propaganda machine.

What happens if we fall in love with a bot? All of this reminds me of the dispute between Elon Musk and Twitter. Bots are permitted on Twitter. Twitter's rules require accounts to declare whether or not they are automated, but after reading the chat with LaMDA, I would have no idea that I was conversing with a machine. This makes it difficult for Twitter to keep track of the number of bot accounts. One of the strongest algorithms for detecting non-human accounts has previously been used to test whether Elon Musk's own account is genuine or fake. Quantifying the number of fraudulent accounts on Twitter has been exceedingly challenging. This issue will only grow, and I doubt that these detection technologies will be able to keep identifying bot accounts.

Act!

Would the EU AI Act be a useful instrument for protecting us from such occurrences? The European Commission proposes categorizing AI systems into four levels of risk, each with associated legal requirements and constraints. Chatbots such as LaMDA, for example, fall under the limited-risk category, which covers systems where there is a possibility of manipulation or deception (e.g. deep fakes). According to the AI Act, natural persons should be made aware that they are dealing with an AI system and should be informed whether emotion recognition or biometric categorisation systems are being used on them. This proposed restriction on impersonating humans may be the most significant outcome of this regulation.

However, given that the bulk of our society lacks the digital literacy skills required to grasp the full impact of these bots, I believe this legislation falls short, and chatbots should be treated as high-risk systems. Allow me to explain.

Put them on Trial

We often think of high-risk systems as self-driving cars or technologies that can inflict bodily harm or death. However, with sentient-like chatbots like LaMDA, we put fundamental liberties such as freedom of thought and the protection of mental integrity at risk. Chatbots like LaMDA will be fully capable of manipulating one's mental state or, in the words of the EU AI Act, of causing material distortion of human behavior. The question is whether the traditional notion of harm in liability law applies here. For example, a person may be manipulated by sentient-like AI bots and become a dangerous fanatic, but because that individual does not realize they have been harmed, they are unlikely to report the incident.

Assume we conducted a clinical study of LaMDA, and the participants stated at the start of the study that certain changes in mental disposition are unacceptable and that they do not consent to becoming dangerous extremists. If those changes occurred during the clinical trial, it would be prima facie evidence that the algorithm distorts and alters the mental state of the clinical trial participants.

Clinical studies using chatbots have become widespread in healthcare, particularly in the field of mental illness. Woebot has been a pioneer in this field, and I have used it in my lectures for years. Clinical trials revealed that Woebot users considerably reduced their symptoms of depression, which is amazing. Cognitive-behavioral therapy chatbots work, so it comes as no surprise to me that all chatbots are capable of manipulating one's mental traits. They should therefore be classified as high-risk AI, as not all of them are built with the intention to heal.

Openness will liberate us

Our society is facing new dynamics, shifts, and challenges as exponential changes redefine our world. As AI bots become a part of our world, technology must become easier to control and scrutinise. Regulating AI is crucial. However, the challenge will be to keep building the capacity that allows us to control these developments while at the same time democratising regulatory approvals. Startups and small and medium-sized businesses should not be drowned in rules and costs. An "over-fitted" regulatory structure may likewise be unprepared to deal with "unknown unknowns."

Algorithms should be transparent to the public. As detailed in my second-to-last Hippogram on cores and complements, the industry should push for algorithms to be viewed as a complement to, rather than the core of, any digital business model. LaMDA is built on complicated, opaque technologies: their inner workings remain concealed, and their choices are mostly covered by corporate communications. People who raised ethical concerns and went public in recent months have been sacked. Greater openness would allow us to understand how those with "the power of love" influence society. Transparency and openness will be even more vital in an AI-infused society than they were in the Industrial Age.

It is not only about comprehending how the future AI systems that govern our world work. It's also about how we can share the benefits of knowledge. Many in the tech sector are unaware of the tremendous economic benefits of openness. I have made it my mission to change this.

Thank you for reading the Hippogram. I'll be back next week; until then, stay well and best wishes.

About Bart de Witte

Bart de Witte is not only a leading and distinguished expert on digital transformation in healthcare in Europe but also one of the most progressive thought leaders in his field. He focuses on developing alternative strategies for creating a more desirable future for the post-modern world and all of us. With his co-founder, Viktoria Prantauer, he founded the non-profit organisation Hippo AI Foundation, located in Berlin.

About Hippo AI Foundation

The Hippo AI Foundation is a non-profit that accelerates the development of open-source medical AI by creating data and AI commons (i.e. data and AI as digital common goods/open-source). As an altruistic "data trustee", Hippo unites, cleanses, and de-identifies data from individual and institutional data donations. This means data that is made available without reward for open-source usage that benefits communities or society at large, such as the use of breast cancer data to improve global access to breast cancer diagnostics.